For the last 18 months I’ve been obsessively testing every new voice AI stack I can get my hands on. English builders have it easy: plenty of commercial ASR, LLM, and TTS services now push total round-trip time well under one second.

The moment you venture into lower-resource languages—Arabic included—latency balloons past 1.5 s, sometimes 2 s+.

End-to-end (E2E) speech models promise to fix this by collapsing the classic three-tier pipeline (ASR → LLM → TTS) into a single model that speaks and listens concurrently. OpenAI set the tone with GPT-4o Realtime, Google (Gemini) and Amazon (Nova) followed, and Ultravox became the first vendor to open-source a truly real-time multimodal model.

Why focus on Ultravox?

- Open-source weights let you self-host for maximum control and privacy.

- Their cloud API is production-ready, so you can start fast and migrate on-prem later.

- Built-in neural VAD (voice-activity detection) means no external turn detector is required.

I’ve recently started exploring how to integrate Ultravox with some of the most widely used voice agent frameworks. Pipecat, for example, offers an Ultravox service, but the current Pipecat <-> Ultravox integration doesn’t support tool use or function calls, which makes it suitable only for a limited range of scenarios. For most voice AI agents today, this limitation is a deal breaker.

Why End-to-End Speech Models Matter

Traditional voice agents use a cascaded approach:

Audio Input → ASR → Text → LLM → Text Response → TTS → Audio Output

Each step adds latency:

- ASR: 200-400ms

- LLM inference: 300-800ms

- TTS: 200-400ms

- Network overhead: 100-200ms per API call

Total: 1.5-2.5 seconds (or even more sometimes)
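As a rough sanity check on that budget, here is a minimal sketch that adds up the ranges listed above. The only thing not taken from the list is the assumption of three remote API calls (one each for ASR, LLM, and TTS). The itemized stages alone come to roughly 1.0 to 2.2 s, so the total quoted above presumably also covers things the list does not itemize, such as endpointing delay and audio buffering (that attribution is my guess, not a measurement).

```python
# Per-stage latency ranges in milliseconds, copied from the list above.
STAGES_MS = {
    "asr": (200, 400),
    "llm": (300, 800),
    "tts": (200, 400),
}
NETWORK_MS_PER_CALL = (100, 200)
API_CALLS = 3  # assumption: one remote call each for ASR, LLM, and TTS


def cascaded_budget_ms(stages=STAGES_MS, network=NETWORK_MS_PER_CALL, calls=API_CALLS):
    """Return the (best, worst) case latency of the cascade in milliseconds."""
    best = sum(low for low, _ in stages.values()) + calls * network[0]
    worst = sum(high for _, high in stages.values()) + calls * network[1]
    return best, worst


best, worst = cascaded_budget_ms()
print(f"itemized cascade: {best}-{worst} ms")
```

Changing `API_CALLS` to 1 shows how much of the budget is pure transport when all three stages are co-located.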

End-to-end models process speech directly:

Audio Input → Speech Model → Audio Output

By collapsing the architecture into a single end-to-end model, voice agents bypass the bottlenecks of the three-tier system. This brings latency below 1000 ms (below 600 ms if deployed locally), which makes conversations feel more fluid and natural. It also enriches the LLM’s (or maybe SLM’s, in this case?) understanding by giving it the full tonal and emotional context of the raw audio rather than a flat text transcription.

Implementation Notes

Ultravox’s WebSocket protocol (see documentation here) provides a good base for server-to-server integrations. I also found that LiveKit’s ‘RealtimeModel’ base class had already been written for GPT-4o Realtime, which has been around for a few months now. That made things a lot easier, as I had an existing reference to look at when needed.

I decided to ditch the turn-detector plugin built into LiveKit Agents in favour of Ultravox’s neural VAD. Ultravox operates as an end-to-end speech-to-speech model with built-in neural VAD that understands conversational context; its description reads: “the system analyzes 32ms audio frames and incorporates language understanding to avoid premature interruptions”. Operating on the audio input directly seems like a better option than the text-based approach that LiveKit’s turn detector plugin currently uses.
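To get a feel for what a 32 ms frame means in practice, here is a small sketch that computes the frame size and slices a raw PCM buffer into frames. The 16 kHz sample rate and 16-bit mono format are my assumptions for illustration; the quoted description only gives the 32 ms figure, and this is not how Ultravox itself is implemented.

```python
SAMPLE_RATE_HZ = 16_000  # assumption: 16 kHz mono input
BYTES_PER_SAMPLE = 2     # assumption: 16-bit PCM
FRAME_MS = 32            # frame length quoted in the VAD description


def frame_size_bytes(sample_rate=SAMPLE_RATE_HZ, frame_ms=FRAME_MS,
                     bytes_per_sample=BYTES_PER_SAMPLE):
    """Number of bytes in one frame of raw PCM audio."""
    samples_per_frame = sample_rate * frame_ms // 1000
    return samples_per_frame * bytes_per_sample


def split_frames(pcm: bytes, frame_bytes: int):
    """Yield complete frames; a trailing partial frame is dropped."""
    for start in range(0, len(pcm) - frame_bytes + 1, frame_bytes):
        yield pcm[start:start + frame_bytes]


one_second_of_silence = bytes(SAMPLE_RATE_HZ * BYTES_PER_SAMPLE)
frames = list(split_frames(one_second_of_silence, frame_size_bytes()))
print(frame_size_bytes(), len(frames))
```

Under these assumptions a frame is 512 samples, so the detector gets about 31 decisions per second of audio, far more granular than waiting for a text transcript to arrive.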

You can check the release status of this plugin in the following PR: https://github.com/livekit/agents/pull/2992

Latency Figures

I used this newly developed plugin in an Arabic voice agent, which is basically a translated version of the agent file in the /examples directory of the above PR. Only the tool definitions were left untranslated, as I found that translating them hurts tool-calling performance.
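To illustrate the split between translated instructions and untranslated tools, here is a hypothetical sketch. The tool name, its fields, and the prompt text are made up for illustration and are not taken from the PR’s example agent; the exact schema the plugin expects may differ from this generic JSON-schema style.

```python
import json

# Hypothetical tool, for illustration only. Name, description, and
# parameter docs stay in English.
BOOK_APPOINTMENT_TOOL = {
    "name": "book_appointment",
    "description": "Book an appointment for the caller on a given date and time.",
    "parameters": {
        "type": "object",
        "properties": {
            "date": {"type": "string", "description": "Date in YYYY-MM-DD format."},
            "time": {"type": "string", "description": "Time in 24-hour HH:MM format."},
        },
        "required": ["date", "time"],
    },
}

# The agent instructions are what gets translated (hypothetical prompt:
# "You are a friendly voice assistant. Always speak in Arabic.").
SYSTEM_PROMPT_AR = "أنت مساعد صوتي ودود. تحدث دائماً باللغة العربية."


def is_english_only(tool: dict) -> bool:
    """True if the serialized tool definition contains only ASCII text."""
    return json.dumps(tool, ensure_ascii=False).isascii()


print(is_english_only(BOOK_APPOINTMENT_TOOL))
```

A check like `is_english_only` is a cheap guard to run at startup so a translated description does not slip into a tool definition by accident.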

I spun up this LiveKit voice agent on a $5 Hetzner VM and went to LiveKit’s playground to test how it performs.

I expected good TTFB numbers, and that is what I got:

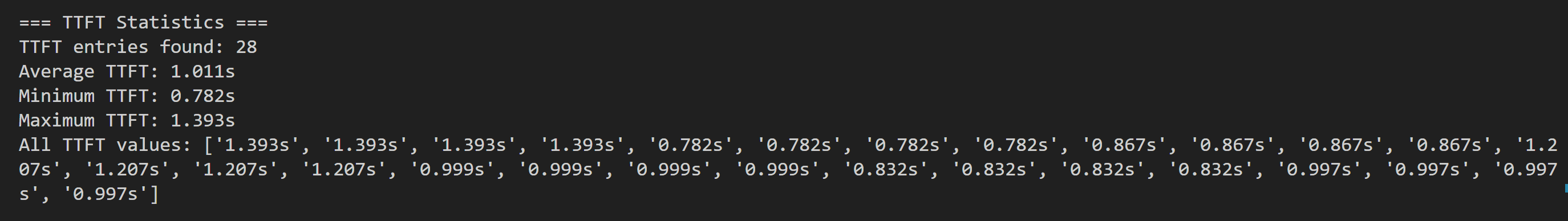

Measured over 7 turns, 5 of which included function calls (ignore the #28; it is a bug in the log extraction script).

Current Limitations

However, it really struggled with numbers and dates: whenever it tried to say a specific number or date, it clearly mispronounced the numerals, as you can hear here:

Numbers and dates are the Achilles’ heel. The model consistently mangles Arabic numerals and calendar phrases, as you can hear for yourself in the audio clip above. Until this is fixed I would not ship Ultravox Arabic in a production voice agent.

While the latest version (Ultravox v0.6) should address some of these limitations, my guess is that performance on Arabic will be largely unchanged.

Next Steps

Luckily, Ultravox is an open-source model, which means we can fine-tune it to improve its performance on a specific language or in a specific domain. The open-source version differs from the API in that it outputs text, but it still has the multimodal projector that converts audio directly into the high-dimensional space used by the LLM backbone.

As a side bonus, hosting this locally would yield even better TTFT figures. The last time I hosted it on Cerebrium, I was getting 600 ms E2E latency, of which Ultravox took around 350 ms and Cartesia’s Sonic-2 took 250 ms. These are the times taken by the individual models and don’t account for network/transport and other factors.

I’ll be fine-tuning this model to improve its performance on Arabic and combining it with an Arabic-capable TTS to see how it performs.

I firmly believe that speech-to-speech models are the next up-and-coming frontier of voice agents, and I have high hopes for them. The path forward requires us to tackle their current limitations head-on. As a community of developers and researchers, let’s see how we will adjust for this lack of performance on numbers and dates, as well as their shortcomings with the current observability stacks, to push the boundaries of what’s possible and unlock truly natural and human-like voice interactions.